Networking for the future

Networking Needs

Next-generation networking needs can be broadly listed as follows:

- More layer 2 network partitions

- Programmatic and dynamic traffic shaping

- Dynamic control of traffic flow

- Automated provisioning of networking resources

- QoS extensibility

- Security of the network

We also need to keep in mind that the IPv4 address pool is officially exhausted, so these needs apply to both IPv4 (existing networks) and IPv6.

No single technology fulfills these needs; rather, a group of technologies can work alongside each other. What the needs make clear, however, is that software has to be involved in the networking. Hence the umbrella term SDN (Software Defined Networking) was coined.

Software Defined Networking

Traditionally, routers and switches ran proprietary software that decided whether to forward packets and where to forward them. This software was controlled by the company that created it (for example, Cisco IOS). To explain how this evolved, let us take the example of a switch, which is by far the most critical component in the data center environment.

Evolution of Networking Devices

In the oldest switches, all (or most) of the work was done in software. A single operating system controlled the packet flow and every other activity of the switch. This worked very well, but it was not fast enough, so vendors started using ASICs. Let’s take a look at a very high-level block diagram of the control points of a switch.

Switch Block Diagram: Software Only

Once high-speed ASICs were introduced, switches offloaded the mundane work to the ASICs, and only the work that needed some processing was sent to the operating system.

Switch Block Diagram: ASIC + Software

This method was successful. As embedded systems improved and ASICs became more capable, more and more functions could be done in hardware rather than in software. The next requirement was high port density for data centers, which was met by using a chassis architecture and moving all the brains onto separate cards (the supervisor modules).

This was the point at which the data plane and the control plane could be separated: the supervisor module (or equivalent controller card) became the brains and kept track of the switching decisions, ACLs, etc.

The chassis backplane shown in the diagram connects everything to everything within a switch. An architecture like this enabled devices to be very high speed and gave rise to technologies like express forwarding.

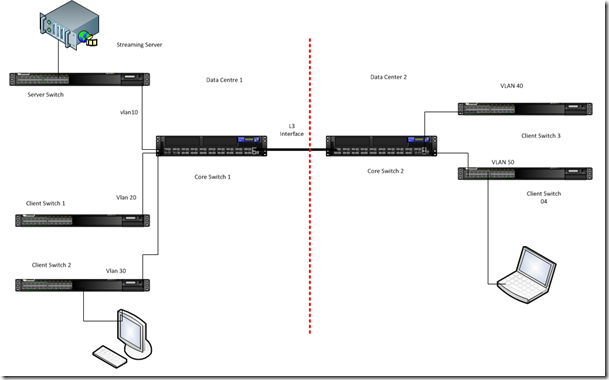

However, up to this point the brains of a device remained in the device. The next innovation was extending the fabric (the switch backplane) not only across devices, which was achieved using stacking, but also across racks and sometimes data centers. This is when Virtual Chassis (Juniper) and Fabric Extender (Cisco Nexus) came into the picture. Here the backplanes of several devices are connected using cables (proprietary or Ethernet); the top device then acts as the brain and the others act as mere line cards.

Switches of this kind are called fabric switches. (Of course, it is a little more complicated than the diagram shown above; nevertheless, it conveys the idea.)

Blow the brains out, Literally!!!

The need of the hour in networking is to take the brain out of the system and create an abstraction layer. This is required because networks contain many different devices from many different vendors. Having already moved the controller out of the line card within a device, any device could function as the controller if it had the right tools. That is the basis of software defined networking.

In SDN, devices are able to accept another device as their brain (the controller) and leave the decisions to it. A protocol was needed to make this work, and in came OpenFlow.

SDN allows a device to make routing and switching decisions dynamically on a wide variety of parameters, not just those controlled by routing/switching protocols, and the decisions can be programmed entirely around the requirements of the enterprise.

So, in this case, a server (or an appliance) runs the OpenFlow controller. The OpenFlow switch sends information to the controller continuously (like NetFlow), and the controller takes decisions on the basis of that information.

We can configure it as needed. In the normal case, the line card sends the first packet of a flow to the routing/switching engine, which does the lookup and then tells the line card which port to forward it out of. Further packets of the same flow are forwarded the same way, but without contacting the routing engine. With OpenFlow, the routing/switching engine can be the OpenFlow controller, and since the controller bases its decision on many more parameters, it can do very dynamic routing, load balancing over links, and so on.
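The first-packet behaviour described above can be sketched roughly as follows. This is only an illustration of the idea, not the OpenFlow wire protocol: the `FlowKey`, `Controller` and `Switch` names, the fields used to identify a flow, and the round-robin policy are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FlowKey:
    """Hypothetical flow identifier; a real switch can match on many more fields."""
    src_mac: str
    dst_mac: str
    in_port: int


class Controller:
    """Toy stand-in for an external controller that picks an output port."""

    def __init__(self, uplinks):
        self.uplinks = list(uplinks)
        self.next_link = 0
        self.decisions = 0  # how many times the switch had to ask us

    def decide(self, key):
        # Assumed policy: spread new flows across the uplinks round-robin.
        port = self.uplinks[self.next_link % len(self.uplinks)]
        self.next_link += 1
        self.decisions += 1
        return port


class Switch:
    """Forwards from a local flow table and asks the controller on a miss."""

    def __init__(self, controller):
        self.controller = controller
        self.flow_table = {}  # FlowKey -> output port

    def forward(self, key):
        if key not in self.flow_table:
            # First packet of the flow: the decision comes from the controller
            # and is cached so later packets never leave the switch.
            self.flow_table[key] = self.controller.decide(key)
        return self.flow_table[key]


controller = Controller(uplinks=[1, 2])
switch = Switch(controller)
flow_a = FlowKey("aa:aa:aa:aa:aa:aa", "bb:bb:bb:bb:bb:bb", 5)
flow_b = FlowKey("cc:cc:cc:cc:cc:cc", "dd:dd:dd:dd:dd:dd", 6)
first = switch.forward(flow_a)    # table miss, controller consulted
second = switch.forward(flow_a)   # table hit, controller not consulted
other = switch.forward(flow_b)    # new flow, controller picks the next uplink
```

Because the policy lives in `Controller.decide`, swapping round-robin for something smarter (link load, time of day, tenant) would not require touching the switch at all, which is the point the paragraph above is making.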

OpenFlow also allows the configuration of the devices to be changed programmatically, so provisioning and other aspects are covered by the same protocol. So the network is controlled by the same server that it is providing the network to. Sounds ironic, doesn’t it? But you can imagine the ramifications: a single piece of software will be able to orchestrate, manage, troubleshoot and optimize the network.

What’s more, OpenFlow is a standard protocol that works the same way across all vendors’ devices, so the configuration and management of the devices can be abstracted and become vendor independent for most standard features.

Vendors supporting OpenFlow

All the major vendors support OpenFlow. Juniper’s Junos has had programming capability for a long time. HP has released OpenFlow-compatible switches, which work with open source controllers and NEC controllers. Extreme, NEC and Cisco also support OpenFlow. Cisco calls its program Open Network Environment (ONE) and is working on API access in NX-OS, which runs on the Nexus devices.

OpenFlow components

As we saw, OpenFlow needs an OpenFlow-compatible switch and an OpenFlow controller. Here is a list of hardware and software switches that support OpenFlow.

Switches

- HP 5400/6600 – Software Version K.15.05.5001

- IBM G8264

- Pronto

- NEC PF5240

- NetFPGA Switch

- Open vSwitch

- OpenWrt

Controllers

The following open source controller software is available to act as the flow controller:

- NOX

- Beacon

- Helios

- BigSwitch

- SNAC

- Maestro

Please note that OpenFlow is currently at an experimental stage, and it will take some time for it to mature and for the features to become available.

Constraints

OpenFlow and SDN are excellent, but there are things they can’t do. They cannot virtualize the devices or make them multi-tenant; device vendors need to do that on the devices themselves. Once they have done so (as with VRFs), these features can be managed by OpenFlow without any issues. In other words, OpenFlow and SDN will need to work with other protocols and technologies to get close to what we are looking for. OpenFlow covers all the needs we looked at in the beginning except the network partition requirement.

Partition network into multiple layer 2 boundaries

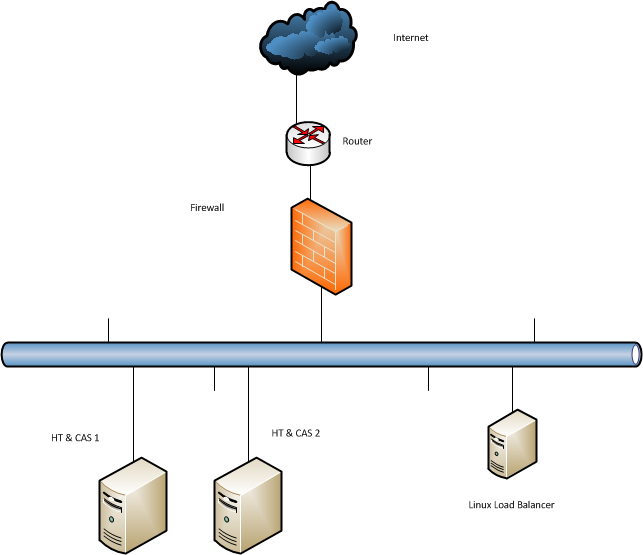

With vendors moving to a multi-tenant model for their cloud computing offerings, they need a way to securely partition the different tenants. This is normally done using VLANs, but the VLAN ID is only 12 bits long, so there can be at most 4096 of them. Set aside a few reserved VLANs and you have even fewer.
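The arithmetic behind that limit is easy to check. The snippet below assumes only the two IDs that IEEE 802.1Q itself reserves (0 and 4095); individual vendors often reserve more for internal use, so the usable count on a given switch may be lower still.

```python
VLAN_ID_BITS = 12

# Every possible value of a 12-bit field.
total_vlan_ids = 2 ** VLAN_ID_BITS

# IDs reserved by IEEE 802.1Q itself; vendor-specific reservations are not counted here.
STANDARD_RESERVED_IDS = {0, 4095}

usable_vlan_ids = total_vlan_ids - len(STANDARD_RESERVED_IDS)

print(total_vlan_ids, usable_vlan_ids)  # prints: 4096 4094
```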

VLANs also cannot cross a layer 3 boundary, which is what we need in order to span multiple data centers. Traditionally this has been done with layer 2 links between the data centers, which is no longer feasible.

To solve these problems, a new protocol called VXLAN, an extended form of VLAN, has been devised.

As the name VXLANs (Virtual eXtensible LANs) implies, the technology is meant to provide the same services to connected Ethernet end systems that VLANs do today, but in a more extensible manner. Compared to VLANs, VXLANs are extensible with regard to scale, and extensible with regard to the reach of their deployment.

The VXLAN draft defines the VXLAN Tunnel End Point (VTEP) which contains all the functionality needed to provide Ethernet layer 2 services to connected end systems. VTEPs are intended to be at the edge of the network, typically connecting an access switch (virtual or physical) to an IP transport network. It is expected that the VTEP functionality would be built into the access switch, but it is logically separate from the access switch. The figure below depicts the relative placement of the VTEP function.
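To make the VTEP’s job a little more concrete, here is a rough sketch of the encapsulation step. It assumes the 8-byte VXLAN header layout from the published specification (RFC 7348): one flags byte with the "VNI present" bit set, 24 reserved bits, a 24-bit VXLAN Network Identifier (VNI), and 8 more reserved bits. The function names are made up for this example, and the outer Ethernet, IP and UDP headers that a real VTEP also adds are left out.

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08   # the "I" flag: the VNI field carries a valid ID
VNI_BITS = 24
VXLAN_HEADER_LEN = 8


def vxlan_encapsulate(vni, inner_frame):
    """Prefix an inner Ethernet frame with a VXLAN header (outer headers omitted)."""
    if not 0 <= vni < 2 ** VNI_BITS:
        raise ValueError("VNI must fit in 24 bits")
    # Word 0: flags in the top byte, 24 reserved bits left as zero.
    # Word 1: VNI in the top 24 bits, 8 reserved bits left as zero.
    header = struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)
    return header + inner_frame


def vxlan_decapsulate(payload):
    """Return (vni, inner_frame) from a VXLAN payload built as above."""
    if len(payload) < VXLAN_HEADER_LEN:
        raise ValueError("payload shorter than a VXLAN header")
    word0, word1 = struct.unpack("!II", payload[:VXLAN_HEADER_LEN])
    if not (word0 >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI flag not set")
    return word1 >> 8, payload[VXLAN_HEADER_LEN:]


packet = vxlan_encapsulate(5000, b"inner ethernet frame")
vni, frame = vxlan_decapsulate(packet)
```

A 24-bit identifier gives 2 ** 24 = 16,777,216 possible segments, compared with the 4096 of a 12-bit VLAN ID, which is the "extensible with regard to scale" part.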

Each end system connected to the same access switch communicates through the access switch. The access switch acts as any learning bridge does, by flooding out its ports when it doesn’t know the destination MAC, or sending out a single port when it has learned which direction leads to the end station as determined by source MAC learning. Broadcast traffic is sent out all ports. Further, the access switch can support multiple “bridge domains” which are typically identified as VLANs with an associated VLAN ID that is carried in the 802.1Q header on trunk ports. In the case of a VXLAN enabled switch, the bridge domain would instead be associated with a VXLAN ID.
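The learning-bridge behaviour described above can be sketched in a few lines. This is a simplified model, not any vendor’s implementation: the `LearningBridge` class is hypothetical, every port is assumed to belong to every bridge domain, entries never age out, and frames are flooded to all ports except the one they arrived on.

```python
BROADCAST_MAC = "ff:ff:ff:ff:ff:ff"


class LearningBridge:
    """Toy learning bridge with a separate MAC table per bridge domain."""

    def __init__(self, ports):
        self.ports = set(ports)
        # (bridge domain ID, MAC address) -> port where that MAC was last seen.
        # The domain ID can be a VLAN ID or a VXLAN ID; the logic is the same.
        self.mac_table = {}

    def receive(self, domain, in_port, src_mac, dst_mac):
        """Learn the source MAC and return the list of ports to send the frame out of."""
        self.mac_table[(domain, src_mac)] = in_port

        known_port = self.mac_table.get((domain, dst_mac))
        if dst_mac == BROADCAST_MAC or known_port is None:
            # Broadcast or unknown destination: flood everywhere but the ingress port.
            return sorted(self.ports - {in_port})
        if known_port == in_port:
            # Destination is on the segment the frame came from; nothing to forward.
            return []
        return [known_port]


bridge = LearningBridge(ports=[1, 2, 3])
flooded = bridge.receive(100, 1, "aa:aa", "bb:bb")       # bb:bb unknown, flood
learned = bridge.receive(100, 2, "bb:bb", "aa:aa")       # aa:aa was learned on port 1
direct = bridge.receive(100, 1, "aa:aa", "bb:bb")        # bb:bb now known on port 2
isolated = bridge.receive(200, 3, "cc:cc", "aa:aa")      # other domain knows nothing of aa:aa
broadcast = bridge.receive(100, 1, "aa:aa", BROADCAST_MAC)
```

Keying the table on the domain ID as well as the MAC is what keeps tenants apart: a MAC learned in domain 100 is invisible to a lookup in domain 200.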

So with these two technologies we will be able to achieve the requirements that we mentioned at the start of the document.
